Software metrics are a set of measurable characteristics that allow one to objectively evaluate the performance, professional competency, reliability, and other qualities of a given software product.

There are standards that set the bar for software solutions. Releasing a product that falls below that bar or ignores these metrics makes little sense: it will either break under competitive pressure, fail to attract users, or turn out unprofitable for the company that developed it.

When planning to cooperate with a software development partner, it is crucial to clarify, before making a deal, which standards and metrics its employees adhere to when working on projects. Below, we discuss the importance of these metrics in detail and share a set of software metrics examples and standards we practice.

And remember that software metrics and the reliability of your end product go hand in hand.

Why are Software Metrics Important?

As you might have already understood, the main goal of defining these characteristics is to establish the level of product quality your team of developers is expected to deliver. Considered individually, software quality metrics pursue the following goals:

- to increase ROI;

- to optimize the cost-to-result ratio;

- to reduce the stress load on the development team;

- to shorten the product implementation time.

How Come Adhering to the Same Metrics & Standards Results in Products of Different Quality?

Although there are a number of commonly accepted standards and metrics for software solutions (for example, counting lines of code, or LOC), two different development companies may still arrive at very different evaluation data.

For instance, LOC can be counted in two ways: by counting each line, or by counting each block ending in a “return” command. These two approaches give different results, so the metric evaluation differs as well, which makes it harder to compare software products built by separate development teams. That is why some companies adopt in-house agreements that are referenced throughout the whole product development cycle, starting with the initial software quality metrics overview.
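To make the difference concrete, here is a minimal Python sketch that counts the same snippet in two ways. It is an illustration under stated assumptions, not a standard tool: `physical_loc` counts every line, while `logical_loc` approximates the second convention by counting statement nodes in the syntax tree. Both function names and the sample snippet are hypothetical.

```python
import ast

# Hypothetical sample used only for this illustration.
SAMPLE = '''\
def area(w, h):
    # width times height
    result = w * h

    return result
'''


def physical_loc(source: str) -> int:
    """Count every line, including blank lines and comments."""
    return len(source.splitlines())


def logical_loc(source: str) -> int:
    """Count statement nodes (def, assignment, return, ...) in the parsed tree.

    Assumption: this stands in for a "logical" counting convention;
    a team may define its own rule instead.
    """
    tree = ast.parse(source)
    return sum(isinstance(node, ast.stmt) for node in ast.walk(tree))


if __name__ == "__main__":
    print("physical:", physical_loc(SAMPLE))  # 5
    print("logical: ", logical_loc(SAMPLE))   # 3
```

The same five-line function is reported as 5 or 3 depending on the convention, which is exactly why teams need to agree on one counting rule before comparing numbers.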

Fortifier Software Metrics Examples & Standards

Experts from Fortifier, a highly experienced Insurtech development company, adhere to an original in-house software metrics list and the international development standards IEEE 982, IEEE 1091, and ISO/IEC 9126 when creating each and every product. We know firsthand how and why software metrics are important. Therefore, we have introduced a set of measures that help us manage our products’ level of quality, subdivided into the following groups:

Management

These metrics help manage the development process, requirements compliance, initial identification of potential data sources, product release preparations, correctness of the data and resources involved, etc. This group also includes software engineering KPI metrics and consists of:

- Requirements Traceability

- Software Maturity Index

- Number of Conflicting Requirements

- Number of Entries and Exits per Module

- Software Purity Level

- Requirements Compliance

- Data or Information Flow Complexity

- Software Documentation and Source Listings

- Software Release Readiness

- Completeness

Development

Development metrics make it possible to assess the complexity of product implementation as well as the complexity and quality of the architecture, unit tests, etc. This is a crucial part of our software metrics dashboard, which includes:

- Functional or Modular Test Coverage

- Software Science Measures

- Graph-Theoretic Complexity for Architecture

- Cyclomatic Complexity

- Minimal Unit Test Case Determination

- Design Structure

- Test Coverage
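
As an illustration of one item from this list, cyclomatic complexity can be approximated as the number of decision points in a piece of code plus one. The Python sketch below is a simplified estimate under that assumption. It counts only a few node types, and the names `cyclomatic_complexity` and `DECISION_NODES` are hypothetical, not part of any standard tool.

```python
import ast

# Node types treated as decision points in this simplified sketch.
DECISION_NODES = (ast.If, ast.For, ast.While, ast.IfExp, ast.ExceptHandler)

# Hypothetical sample used only for this illustration.
SAMPLE = '''\
def classify(n):
    if n < 0:
        return "negative"
    for d in (2, 3):
        if n % d == 0 and n > d:
            return "composite"
    return "other"
'''


def cyclomatic_complexity(source: str) -> int:
    """Estimate complexity as 1 + number of decision points."""
    complexity = 1
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, DECISION_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # "a and b and c" adds two extra branches.
            complexity += len(node.values) - 1
    return complexity


if __name__ == "__main__":
    print(cyclomatic_complexity(SAMPLE))  # 5
```

Dedicated analyzers handle more constructs, so treat the number as a rough indicator rather than an exact measurement.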

Testing

Use these software metrics to determine the number and types of existing defects, bugs, and flaws, to measure downtime, and to monitor how these figures change throughout the development cycle so that you can set reasonable deadlines for fixing them. These include:

- Fault Density

- Defect Density

- Cumulative Failure Profile

- Cause and Effect Graphing

- Defect Indices

- Error Distribution(s)

- Man-hours per Major Defect Detected

- Mean Time to Discover the Next K Faults

- Estimated Number of Faults Remaining (by Seeding)

- Residual Fault Count

- Failure Analysis Using Elapsed Time

- Testing Sufficiency

- Mean Time-to-Failure

- Failure Rate

- Test Accuracy
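
Several of these measures come down to simple ratios. The sketch below shows commonly used simplified definitions, which are assumptions here rather than the exact formulations of any standard: defect density as defects per thousand lines of code, mean time-to-failure as the average interval between failures, and failure rate as failures per hour of operation. All function names are hypothetical.

```python
from statistics import mean


def defect_density(defects: int, lines_of_code: int) -> float:
    """Defects per thousand lines of code (KLOC)."""
    return defects / (lines_of_code / 1000)


def mean_time_to_failure(hours_between_failures: list[float]) -> float:
    """Average operating time between consecutive failures, in hours."""
    return mean(hours_between_failures)


def failure_rate(hours_between_failures: list[float]) -> float:
    """Failures per operating hour over the observed period."""
    return len(hours_between_failures) / sum(hours_between_failures)


if __name__ == "__main__":
    intervals = [100.0, 150.0, 50.0]  # made-up observation data
    print(defect_density(12, 8000))         # 1.5 defects per KLOC
    print(mean_time_to_failure(intervals))  # 100.0 hours
    print(failure_rate(intervals))          # 0.01 failures per hour
```

Tracking these values sprint by sprint shows whether quality is improving or degrading over time.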

Reliability

Last but not least, software reliability metrics make it possible to measure the “sturdiness” of system performance, fail-operational capabilities, etc., as well as the dynamics of these readings, to help one understand how reliable the end product will be in the long run. This group of metrics consists of:

- Run Reliability

- Reliability Growth Function

- RELY (Required Software Reliability)

- System Performance Reliability

- Independent Process Reliability

- Combined Hardware and Software (System) Operational Availability

Practical Software Metrics Usage Example:

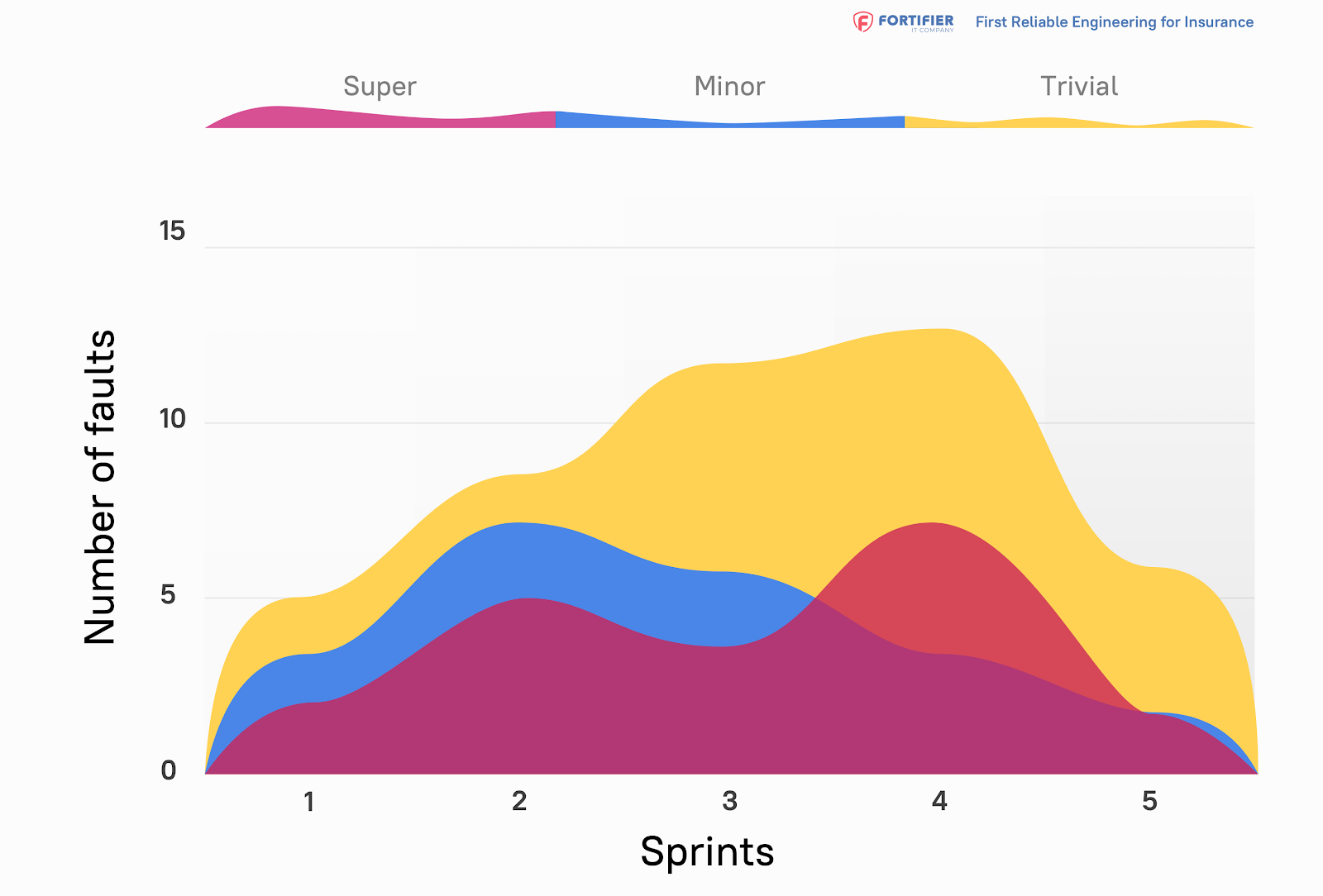

Let’s go through one of the testing metrics, the Defect Index (DI), to show why our basic software metrics classification is needed. This measure provides a continuing, relative index of how correct the software is as it proceeds through the development cycle.

Primitives

Di = total number of defects detected during the i-th phase

Si = number of serious defects found

Mi = number of medium defects found

Ti = number of trivial defects found

Ws = weighting factor for serious defects (default is 10)

Wm = weighting factor for medium defects (default is 3)

Wt = weighting factor for trivial defects (default is 1)

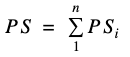

PS = number of features implemented

PIi = phase defect index

The first step is to calculate PIi for each phase using the following formula:

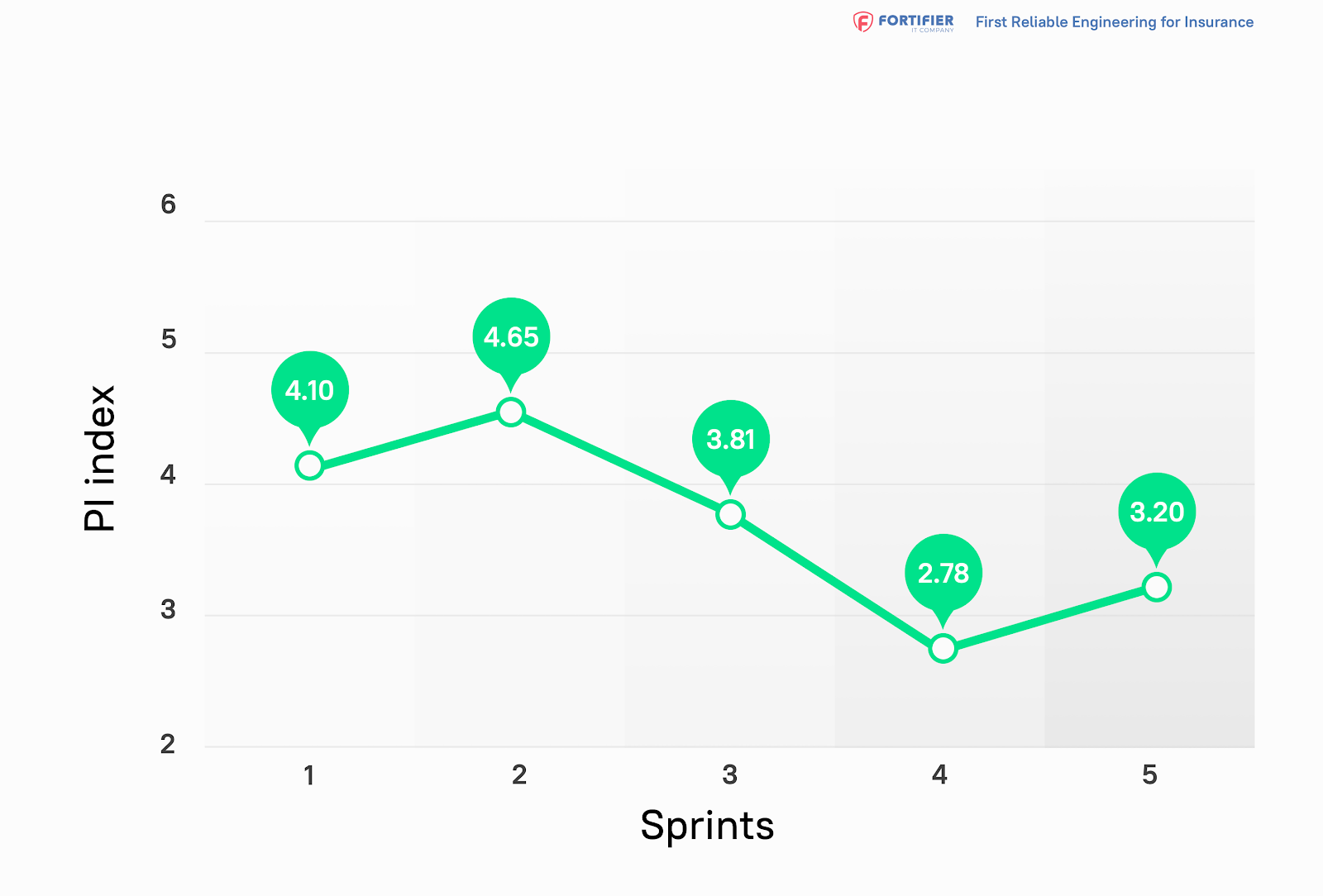
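
For readers who cannot view the image, here is a text reconstruction built from the primitives listed earlier. It assumes the formula follows the Defect Indices measure as we understand it from IEEE 982.1, so treat it as a hedged restatement rather than an exact copy of the picture:

$$
PI_i = W_s \frac{S_i}{D_i} + W_m \frac{M_i}{D_i} + W_t \frac{T_i}{D_i}
$$

In words: each severity class contributes its share of the phase's defects, multiplied by its weight, so a phase dominated by serious defects scores close to 10 and a phase with only trivial defects scores 1.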

Thus, we can consider the phase index for each sprint and use it as an indicator of the quality of the sprint.

PI result example

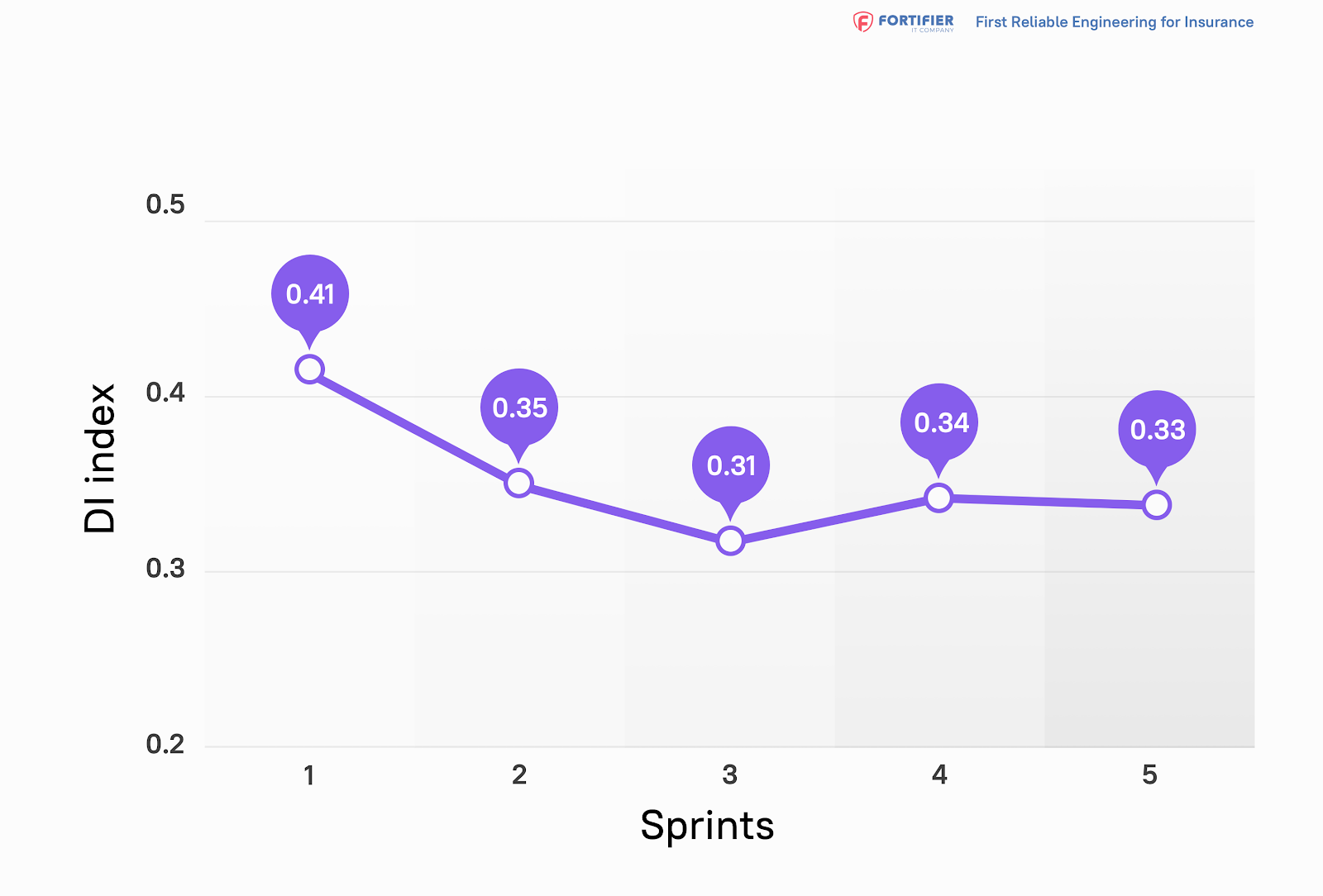

Finally, we have all the indicators needed to calculate the Defect Index for the whole project:

DI result example

This measure may be applied as early in the life cycle as the user has products that can be evaluated.
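
The whole calculation can be sketched in a few lines of Python. This is an illustration under stated assumptions, not our production tooling: it takes Di to be the sum of serious, medium, and trivial defects, treats a phase with no defects as having a phase index of 0, and computes DI as the phase-weighted sum (1·PI1 + 2·PI2 + ...) divided by PS, which is how we understand the IEEE 982.1 definition. The class and function names, as well as the sample numbers, are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PhaseDefects:
    """Defects found in one phase (for example, one sprint)."""
    serious: int
    medium: int
    trivial: int


def phase_index(p: PhaseDefects, ws: float = 10, wm: float = 3, wt: float = 1) -> float:
    """PI_i = Ws*Si/Di + Wm*Mi/Di + Wt*Ti/Di, with Di assumed to be Si + Mi + Ti."""
    total = p.serious + p.medium + p.trivial
    if total == 0:
        return 0.0  # assumption: a defect-free phase contributes nothing
    return (ws * p.serious + wm * p.medium + wt * p.trivial) / total


def defect_index(phases: list[PhaseDefects], product_size: float) -> float:
    """DI = sum(i * PI_i) / PS, with phases numbered from 1."""
    weighted = sum(i * phase_index(p) for i, p in enumerate(phases, start=1))
    return weighted / product_size


if __name__ == "__main__":
    sprints = [PhaseDefects(1, 2, 7), PhaseDefects(0, 3, 7)]  # made-up data
    for number, sprint in enumerate(sprints, start=1):
        print(f"PI{number} =", phase_index(sprint))  # 2.3, then 1.6
    print("DI =", round(defect_index(sprints, product_size=20), 3))  # 0.275
```

Because later phases get a larger multiplier, defects that survive into late sprints push the index up more than defects caught early.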

And this was a brief but essential overview.

How to Properly Formulate One’s Own Set of Standards & Metrics?

In fact, practically every development company tries to adopt the ways of assessing a software product that fit it best. Nevertheless, we can highlight a number of properties that all metrics and standards should have:

- they must be unambiguously measurable;

- they must not depend on any certain programming language;

- they must be applicable to all kinds of software;

- calculations should be open to precision checks;

- calculations’ margin of error must not be critical to your workflow.

Fortifier Approach

At Fortifier, we take a thorough approach to building software, always delivering competitive, reliable solutions that comply with all the implicit quality policies, the latest global trends in the industry, and all major software metrics and development models. Let’s work together so that you can judge from your own experience of cooperating with the country’s leading IT experts.